MEEP FPGA cluster

System Overview

The MEEP FPGA cluster is based on Intel Xeon processors and Xilinx Alveo U55C FPGAs, running a Linux operating system and interconnected via Ethernet.

It has the following configuration:

1 login node (fpgalogin1) and 4 general-purpose compute nodes (fpgac[01-04]), each with:

- 2 x Intel Xeon Gold 6330 with 28 cores @ 2.0GHz

- 256 GB memory distributed in 16 RDIMM x 16GB DDR4 @ 3200 MHz

- GPFS via Ethernet

- 1 TB NVMe mounted as RAID1

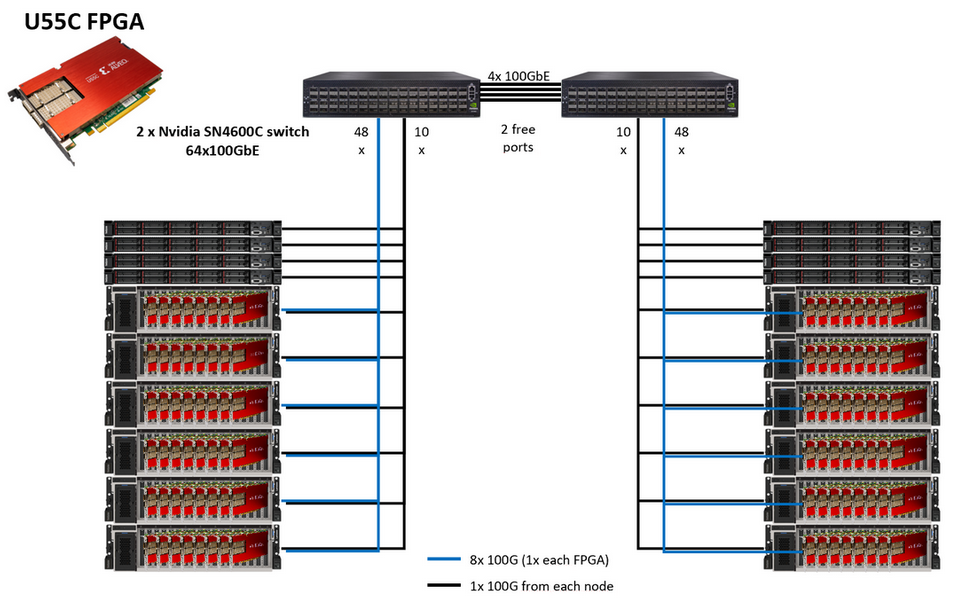

12 FPGA nodes (fpgan[01-12]), each with:

- 2 x Intel Xeon Gold 6330 with 28 cores @ 2.0GHz

- 256 GB memory distributed in 16 RDIMM x 16GB DDR4 @ 3200 MHz

- 8 Xilinx Alveo U55C FPGAs (7.88 GB/s CPU-FPGA bandwidth, 460 GB/s maximum FPGA memory bandwidth, 2 x 100 GbE interfaces per FPGA)

- NFS via Ethernet, for nodes and FPGAs.

- 2 TB NVMe mounted as RAID0

The operating system is Red Hat Enterprise Linux Server 8.4.

FPGA Software

Currently, the Xilinx suite is made available by sourcing the environment it needs; a fuller module system may be introduced in the future to simplify software use. To load the default version of the Xilinx environment, use the xilinx module:

module load xilinx

To list the available versions and load a previous one:

module available

module load xilinx/<VERSION>
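
The commands above can be wrapped in a small helper for use in scripts. This is only a sketch: the function name `load_xilinx` is hypothetical, and it assumes that the `module` command is defined in your shell on the cluster nodes.

```shell
# Hypothetical helper: load the default xilinx module, or a specific
# version when one is passed as the first argument.
load_xilinx() {
    # Fail early with a readable message if the module command is missing.
    if ! command -v module >/dev/null 2>&1; then
        echo "module command not found; are you on the cluster?" >&2
        return 1
    fi
    if [ -n "${1:-}" ]; then
        module load "xilinx/$1"
    else
        module load xilinx
    fi
}
```

Call `load_xilinx` with no arguments for the default version, or pass one of the version strings reported by `module available`.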

Connecting to MEEP FPGA cluster

You can connect to the cluster using the login node:

- fpgalogin1.bsc.es

You must use Secure Shell (ssh) tools to log in to the cluster or to transfer files to it. Incoming connections over other protocols, such as telnet, ftp, rlogin, rcp, or rsh, are not accepted. For security reasons, once you have logged in to the cluster you cannot make outgoing connections. If you need a connection to the outside (e.g. GitHub), make it from login node 0 of MareNostrum (mn0.bsc.es).
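
Because the cluster does not allow outgoing connections, logins and file transfers have to be started from your own machine. The sketch below wraps the standard `ssh` and `scp` commands. The account name `myuser` and the function names `meep_login`, `meep_put`, and `meep_get` are placeholders, not part of the cluster setup.

```shell
# Placeholder account name; replace it with your own cluster username.
CLUSTER_USER="myuser"
CLUSTER_HOST="fpgalogin1.bsc.es"

# Open an interactive session on the login node.
meep_login() {
    ssh "${CLUSTER_USER}@${CLUSTER_HOST}"
}

# Copy a local file ($1) to a path on the cluster ($2, default: home directory).
meep_put() {
    scp "$1" "${CLUSTER_USER}@${CLUSTER_HOST}:${2:-}"
}

# Copy a file on the cluster ($1) to a local path ($2, default: current directory).
meep_get() {
    scp "${CLUSTER_USER}@${CLUSTER_HOST}:$1" "${2:-.}"
}
```

Run these on your local machine, not on the cluster: for example, `meep_put design.tar.gz` uploads a file to your home directory, and `meep_get results.log` downloads one into the current directory (both file names are illustrative).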